Developer experiences from the trenches

Wed 06 July 2016 by Michael Labbe

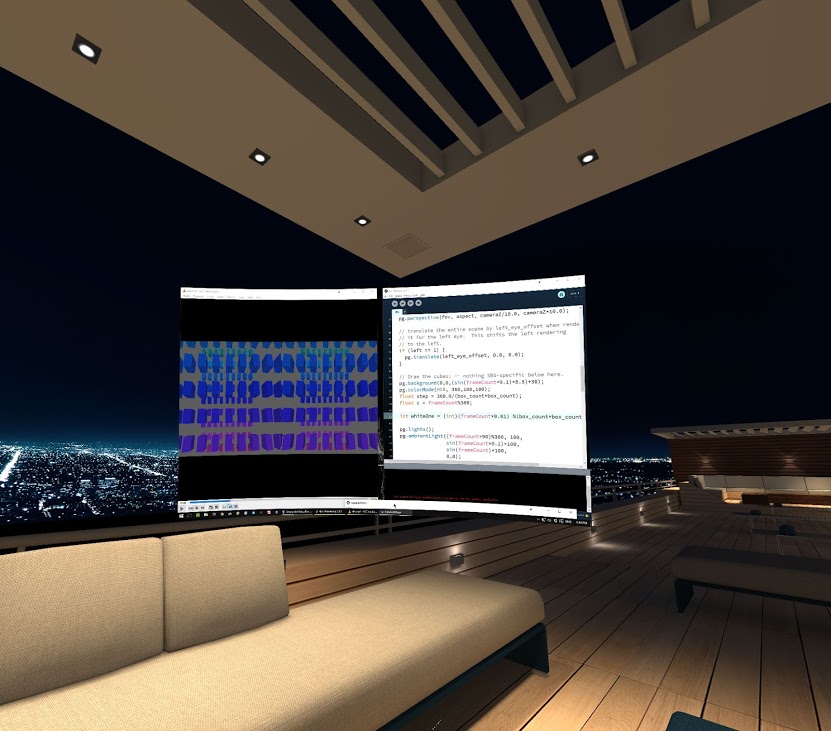

One of the really cool things about social VR is that a little technical know-how can go a long way. Recently, I have been exploring social VR through BigScreen VR, an app that lets you see your monitor in the context of a large virtual room, shared with others. Imagine a virtual LAN party and you have a good idea about what’s going on.

Quietly, they dropped an SBS mode into the “Beta Features” menu. All of a sudden, it is possible to socially livecode 3D content in a VR world. And, it’s possible to do this with a top-to-bottom free software stack. How cool is that?

What is Side-By-Side (SBS) content? Check out this YouTube video to immediately get a sense of what is going on. In SBS videos, the image for each eye is encoded using half of the video framebuffer, with a small horizontal offset in the camera position between the two. If you watch this in a side-by-side video app, each eye sees only its own half of the video. Your brain is tricked into thinking it is seeing 3D objects. Leaning side to side even triggers a sense of parallax.

Because BigScreen shares your screen, you can develop software and test it right inside your VR headset. Enter Processing, a free visual coding environment with support for 3D rendering and offscreen framebuffers.

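To render SBS content in Processing, you render the scene to two offscreen framebuffers, one per eye, with the camera shifted horizontally between them, and then draw the two buffers next to each other so that each fills half of the window.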
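The geometry behind that can be sketched in a few lines of plain Java (the language Processing sketches are built on). This is a rough illustration rather than the sample code itself: the class `SbsLayout`, its method names, and the eye separation value are all made up for this example, and it assumes the common layout where the left eye's image sits on the left half of the frame.

```java
// Hypothetical helper for illustration only: none of these names come from
// Processing or BigScreen.
public class SbsLayout {

    /**
     * Camera X position for one eye.
     * eye is -1 for the left eye and +1 for the right eye, so the two
     * cameras end up eyeSeparation apart, centred on centerX.
     */
    public static float eyeX(float centerX, float eyeSeparation, int eye) {
        return centerX + eye * eyeSeparation / 2.0f;
    }

    /** Width in pixels of each eye's offscreen buffer: half of the frame. */
    public static int viewportWidth(int frameWidth) {
        return frameWidth / 2;
    }

    /**
     * Left edge in pixels of the half of the frame where one eye's buffer
     * is drawn (assumes left eye on the left half).
     */
    public static int viewportX(int frameWidth, int eye) {
        return eye < 0 ? 0 : frameWidth / 2;
    }

    public static void main(String[] args) {
        int frameWidth = 1920;       // assumed window width
        float eyeSeparation = 6.0f;  // assumed value in scene units; tune to taste

        // Walk both eyes: -1 (left), then +1 (right).
        for (int eye = -1; eye <= 1; eye += 2) {
            System.out.printf("%s eye: camera x = %.1f, draw buffer at x = %d, width = %d%n",
                    eye < 0 ? "left" : "right",
                    eyeX(0.0f, eyeSeparation, eye),
                    viewportX(frameWidth, eye),
                    viewportWidth(frameWidth));
        }
    }
}
```

In a Processing sketch, each eye's buffer would typically come from `createGraphics()` with the `P3D` renderer, the per-eye X position would be passed as the eye coordinate to `camera()` on that buffer, and `image()` would place the finished buffer at the computed X offset. A larger separation exaggerates the depth effect, so it is worth experimenting with the value inside the headset.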

Here is sample code with comments that demonstrates exactly how this works. You can copy and paste this into a Processing 3 IDE window and just run it.

If you are simply curious, this YouTube render shows what the Processing sketch looks like.